More advanced ransomware actors are nowadays “smart” enough to destroy your backups before holding your important data for ransom. We therefore strongly recommend keeping redundant copies of your backups, at least one of which is accessible only after MFA and is protected by very strict access control. Cloud storage providers, for example, make this achievable.

Both Azure and AWS offer some form of protection against accidental deletion of files in their backup and storage services. And although some claim to protect against malicious deletion as well, an attacker with sufficiently high privileges can disable all protections and delete the backups anyway. Still, storing backups in the cloud rather than only locally has one key advantage: MFA. Most companies store their backups on a hardened server, but a compromised Domain Admin (DA) account will almost always grant access to those backups. Keeping an additional copy in hardened cloud storage lets you enforce MFA on it. A DA may still be able to obtain Global Admin privileges in your Azure tenant and bypass the MFA requirements, but doing so is significantly harder for the attacker, which makes the implementation well worth the effort.

Since full protection against an attacker deleting backups is not possible, it is important to at least be notified when someone starts tampering with your backups. Unfortunately, even this does not give full coverage: in a fully compromised environment, an attacker can delete these monitoring rules as well. That, however, fits the modus operandi (MO) of nation-state actors more than that of ransomware actors.

Azure Backup

Azure Backup is Microsoft’s main solution for storing backups in Azure. It has many features that we won’t cover here; what we’re interested in is how an attacker can destroy the backups. There are roughly three ways:

- Deleting the whole backup solution. This risk can be limited by using a resource lock.

- Deleting certain backups. This risk can be limited by using the “soft delete” functionality.

- Removing a certain machine from the backup process. This risk should be covered by your business-as-usual operational monitoring.
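For the third scenario, one simple operational check is to compare the machines you expect to be protected against the time of their most recent recovery point: a machine that was removed from the backup process stops producing new ones. The sketch below assumes you can export a mapping from machine name to the timestamp of its latest recovery point; that input format, the `stale_or_missing` helper and the 26-hour threshold (a daily schedule plus some slack) are hypothetical choices for illustration, not part of Azure Backup.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical threshold: a daily backup schedule plus two hours of slack.
MAX_AGE = timedelta(hours=26)


def stale_or_missing(expected_machines, last_recovery_point, now, max_age=MAX_AGE):
    """Return machines with no recovery point at all, or whose latest one is too old.

    last_recovery_point maps machine name -> timezone-aware datetime (hypothetical export).
    """
    flagged = []
    for machine in sorted(expected_machines):
        last = last_recovery_point.get(machine)
        # A machine that was offboarded from backup stops producing recovery points.
        if last is None or now - last > max_age:
            flagged.append(machine)
    return flagged


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    points = {
        "web01": now - timedelta(hours=5),
        "db01": now - timedelta(days=3),
    }
    print(stale_or_missing({"web01", "db01", "app01"}, points, now))  # prints ['app01', 'db01']
```

The threshold should match your own backup schedule; a weekly job would need a much larger `max_age`.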

Deleting a resource lock

A resource lock allows an admin to prevent a resource from being deleted, even by users who are otherwise authorised to delete it. By default, only the “Owner” and “User Access Administrator” roles can delete a resource lock. You can add a “cannot-delete” resource lock to the backup vault to prevent the vault from being deleted. A word of caution: do not put the lock on the resource group. This will break your backups, as Azure can then no longer delete older backups.
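To make the caution above concrete, the following sketch checks a lock's scope and level before you rely on it. It assumes the vault is a Recovery Services vault, so its resource ID follows the usual `/subscriptions/<id>/resourceGroups/<rg>/providers/Microsoft.RecoveryServices/vaults/<name>` shape; a different vault type would use a different provider segment. The `lock_findings` helper is hypothetical; `CanNotDelete` is the lock level Azure uses for a “cannot-delete” lock.

```python
def lock_findings(lock_scope: str, lock_level: str) -> list[str]:
    """Return problems with a lock meant to protect a backup vault (empty list = looks fine)."""
    parts = [p.lower() for p in lock_scope.strip("/").split("/")]
    findings = []

    is_resource_group = (
        len(parts) == 4 and parts[0] == "subscriptions" and parts[2] == "resourcegroups"
    )
    # Assumes a Recovery Services vault; adjust the provider for other vault types.
    is_vault = (
        len(parts) == 8
        and parts[0] == "subscriptions"
        and parts[2] == "resourcegroups"
        and parts[4:7] == ["providers", "microsoft.recoveryservices", "vaults"]
    )

    if is_resource_group:
        findings.append("lock is on the resource group: Azure can no longer delete older backups")
    elif not is_vault:
        findings.append("lock is not scoped to the backup vault itself")

    if lock_level != "CanNotDelete":
        findings.append(f"lock level is {lock_level!r}, expected 'CanNotDelete'")
    return findings


if __name__ == "__main__":
    vault = ("/subscriptions/0000/resourceGroups/rg-backup"
             "/providers/Microsoft.RecoveryServices/vaults/vault1")
    print(lock_findings(vault, "CanNotDelete"))  # prints []
    print(lock_findings("/subscriptions/0000/resourceGroups/rg-backup", "CanNotDelete"))
```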

If an attacker obtains “Owner”, “User Access Administrator” or “Global Administrator” privileges, the attacker can still delete the lock. You can monitor for this deletion with this rule in our FalconFriday repository.
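The rule itself lives in the repository; the sketch below only illustrates the underlying idea in Python. It scans simplified Activity Log records for the `Microsoft.Authorization/locks/delete` operation, which is what Azure logs when a management lock is deleted. The record fields (`operationName`, `status`, `caller`, `resourceId`, `time`) are a hypothetical, flattened shape; real exports name and nest these fields differently and may report the operation name in upper case, hence the case-insensitive comparison.

```python
LOCK_DELETE = "microsoft.authorization/locks/delete"


def lock_deletions(records):
    """Return (time, caller, resource_id, status) for every lock deletion event."""
    return [
        (r["time"], r["caller"], r["resourceId"], r["status"])
        for r in records
        # Compare case-insensitively; exported operation names may be upper case.
        if r["operationName"].lower() == LOCK_DELETE
    ]


if __name__ == "__main__":
    records = [
        {"time": "2024-01-02T10:00:00Z", "caller": "admin@contoso.com",
         "operationName": "MICROSOFT.AUTHORIZATION/LOCKS/DELETE", "status": "Succeeded",
         "resourceId": "/subscriptions/0000/resourceGroups/rg-backup/providers/"
                       "Microsoft.RecoveryServices/vaults/vault1/providers/"
                       "Microsoft.Authorization/locks/protect-vault"},
        {"time": "2024-01-02T10:05:00Z", "caller": "admin@contoso.com",
         "operationName": "Microsoft.Compute/virtualMachines/start/action",
         "status": "Succeeded",
         "resourceId": "/subscriptions/0000/resourceGroups/rg-app/providers/"
                       "Microsoft.Compute/virtualMachines/web01"},
    ]
    for hit in lock_deletions(records):
        print(hit)
```

The status is kept in the output on purpose: a failed attempt to delete a lock is just as interesting to an analyst as a successful one.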

Disabling “soft delete”

By default, the “soft delete” feature is enabled, which keeps deleted backups recoverable for 14 days after deletion. Note that this protection is available only for certain backup types! We assume you’re using a backup type that does support “soft delete”.

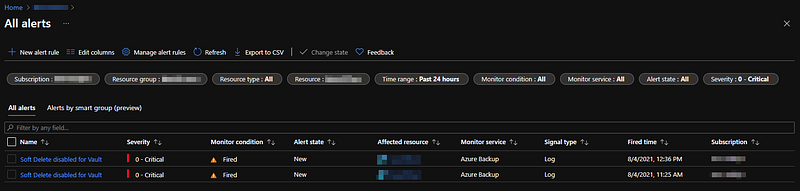
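Why an attacker disables “soft delete” first is easiest to see as a small calculation: with soft delete enabled, a deleted backup can still be restored until 14 days after its deletion; with it disabled, the backup is gone immediately. The sketch below models only that default 14-day window; the helper name is hypothetical and it does not query Azure.

```python
from datetime import datetime, timedelta, timezone

# Default soft-delete retention described above.
SOFT_DELETE_RETENTION = timedelta(days=14)


def recovery_deadline(deleted_at, soft_delete_enabled=True):
    """Last moment a deleted backup can still be restored, or None if it is gone at once."""
    if not soft_delete_enabled:
        return None
    return deleted_at + SOFT_DELETE_RETENTION


if __name__ == "__main__":
    deleted = datetime(2024, 3, 1, 9, 30, tzinfo=timezone.utc)
    print(recovery_deadline(deleted))  # prints 2024-03-15 09:30:00+00:00
    print(recovery_deadline(deleted, soft_delete_enabled=False))  # prints None
```

In other words, a deletion alert has to be handled within that window, and once soft delete is disabled there is no window left at all.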

An attacker who wants to delete your backups for good will first disable “soft delete” and then delete the backed-up items. Unfortunately, the logs provided by Azure don’t explicitly indicate that the “soft delete” feature has been disabled; you can only see that some configuration has changed. So even though it is far from perfect, in a production environment you can detect changes to the backup configuration and give your analysts a playbook for checking whether “soft delete” is still enabled. You can also add a step to the playbook for checking the alerts inside Azure Recovery Services. As far as we know, these alerts are only exposed in the UI and are not visible in Sentinel/Log Analytics; alternatively, you can receive them by email. You can find the query here.
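The playbook step can be sketched as follows: when a configuration change on the vault is logged, look up the vault's current backup configuration and escalate unless soft delete is still enabled. This rests on several assumptions: that the change is logged as `Microsoft.RecoveryServices/vaults/backupconfig/write`, that the retrieved configuration exposes a `softDeleteFeatureState` property, and that you fetch that configuration separately (how is out of scope here). Verify the operation name and property against your own logs before using anything like this.

```python
# Assumed operation name for a vault backup-configuration change; verify in your logs.
CONFIG_WRITE = "microsoft.recoveryservices/vaults/backupconfig/write"


def triage_config_change(event, current_config):
    """Return a verdict for an analyst, given a log event and the vault's current config."""
    if event["operationName"].lower() != CONFIG_WRITE:
        return "ignore"
    # Assumed property name; anything other than "Enabled" is escalated for human review.
    state = current_config.get("softDeleteFeatureState", "Unknown")
    if state == "Enabled":
        return "configuration changed, soft delete still enabled"
    return f"ESCALATE: soft delete state is {state}"


if __name__ == "__main__":
    event = {"operationName": "MICROSOFT.RECOVERYSERVICES/VAULTS/BACKUPCONFIG/WRITE"}
    print(triage_config_change(event, {"softDeleteFeatureState": "Disabled"}))
```

Escalating on any unexpected or missing value is a deliberate choice: a false alarm costs an analyst a few minutes, while a missed change can cost the backups.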

Removing a “backup item”

Finally, an attacker can delete a so-called “backup item”. This deletes all backups of a specific machine and offboards the machine from Azure Backup protection. Luckily, this can be detected fairly easily with this rule.
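As an illustration of what such a rule looks for, the sketch below picks backup item deletions out of simplified Activity Log records and reports the vault and the deleted item, so an analyst can see at a glance which machine was offboarded. We assume the deletion is logged as `Microsoft.RecoveryServices/vaults/backupFabrics/protectionContainers/protectedItems/delete`, following the resource hierarchy of backup items; the flattened record shape and the example resource ID are hypothetical.

```python
# Assumed Activity Log operation name for deleting a backup ("protected") item.
PROTECTED_ITEM_DELETE = (
    "microsoft.recoveryservices/vaults/backupfabrics/"
    "protectioncontainers/protecteditems/delete"
)


def offboarded_items(records):
    """Return (vault, protected item, caller) for every backup item deletion."""
    hits = []
    for r in records:
        if r["operationName"].lower() != PROTECTED_ITEM_DELETE:
            continue
        parts = r["resourceId"].strip("/").split("/")
        lowered = [p.lower() for p in parts]
        # The vault name follows the "vaults" segment; the item name is the last segment.
        vault = parts[lowered.index("vaults") + 1] if "vaults" in lowered else None
        hits.append((vault, parts[-1], r["caller"]))
    return hits


if __name__ == "__main__":
    record = {
        "operationName": "Microsoft.RecoveryServices/vaults/backupFabrics/"
                         "protectionContainers/protectedItems/delete",
        "caller": "admin@contoso.com",
        "resourceId": "/subscriptions/0000/resourceGroups/rg-backup/providers/"
                      "Microsoft.RecoveryServices/vaults/vault1/backupFabrics/Azure/"
                      "protectionContainers/container1/protectedItems/item-web01",
    }
    print(offboarded_items([record]))  # prints [('vault1', 'item-web01', 'admin@contoso.com')]
```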

Azure Storage

Using Azure Storage directly is a less polished way of handling backups and is meant for more customised solutions. It provides only storage, without the other “bells and whistles” that complement a backup solution (such as retention policies). Within Azure Storage, there are two main ways of storing your backups.

You can store a copy of your backups in either “Azure Containers” or “Azure File shares”. For maximum protection, you can use “immutable storage” for Azure containers. If you don’t need such a rigid solution but still want some protection, you can opt for a combination of “soft delete”, “versioning” and a “cannot-delete” lock.
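The trade-off above can be written down as a small checklist. The sketch treats immutable storage as sufficient on its own and otherwise requires all three fallback protections; the settings dictionary and its keys are hypothetical and would have to be filled from your own inventory of the storage account.

```python
FALLBACK_PROTECTIONS = ("soft_delete", "versioning", "cannot_delete_lock")


def protection_level(settings):
    """Classify a backup storage location's settings (hypothetical keys) and list gaps."""
    if settings.get("immutable_storage"):
        return "immutable", []
    missing = [name for name in FALLBACK_PROTECTIONS if not settings.get(name)]
    return ("layered" if not missing else "insufficient"), missing


if __name__ == "__main__":
    print(protection_level({"soft_delete": True, "versioning": False}))
    # prints ('insufficient', ['versioning', 'cannot_delete_lock'])
```

Versioning is a blob feature, so for File shares you may want to drop `versioning` from the list.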

Azure Storage has the same limitations as Azure Backup. The deletion of a “cannot-delete” lock can be detected with the same query as for Azure Backup, since it is a generic query that works for all resource types. We can’t see the details of modifications to “soft delete” and versioning; we can only see that some of these properties have changed. You therefore need to cover these checks manually in your playbooks for when such a detection rule triggers. The rule to detect changes to “Containers” or “File shares” can be found here.

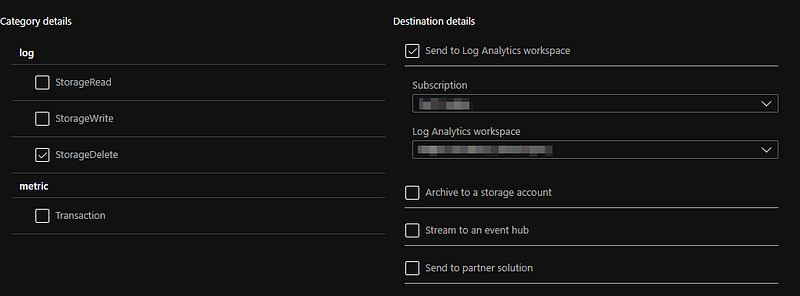
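To illustrate the idea behind that rule, the sketch below counts configuration changes per storage account for a watch-list of operations. The operation names are our assumption of how changes to the blob and file service properties (where soft delete and versioning are configured) and to individual containers and shares show up in the Activity Log; check them against your own logs. The flattened record shape is hypothetical.

```python
from collections import Counter

# Assumed operation names for changes to blob/file service properties (where soft delete
# and versioning are configured) and to individual containers and file shares.
WATCHED_OPERATIONS = {
    "microsoft.storage/storageaccounts/blobservices/write",
    "microsoft.storage/storageaccounts/fileservices/write",
    "microsoft.storage/storageaccounts/blobservices/containers/write",
    "microsoft.storage/storageaccounts/fileservices/shares/write",
}


def changes_per_account(records):
    """Count watched configuration changes per storage account."""
    counts = Counter()
    for r in records:
        if r["operationName"].lower() not in WATCHED_OPERATIONS:
            continue
        parts = [p.lower() for p in r["resourceId"].strip("/").split("/")]
        # The account name is the segment right after "storageAccounts".
        if "storageaccounts" in parts:
            counts[parts[parts.index("storageaccounts") + 1]] += 1
    return counts
```

Any non-zero count for an account that holds backups is a trigger for the manual playbook check described above.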

Finally, you want to detect the deletion of files from storage. This protects you against the “clever” attack of simply deleting the files, breaking the backup procedure and waiting until the retention period has passed. To do so, you need to enable diagnostic logging, which is disabled by default. You need to enable (at least) the “StorageDelete” events for the type of storage you use (i.e. Container or File Share). See the screenshot below.

Once you have added this logging for your Container or File Share, you can use these queries from our FalconFriday repository to detect file deletions.

Regardless of whether you use immutable storage, the queries that detect an attempted delete operation on a file are useful indicators of compromise: even a failed attempt shows that someone is going after your backups.
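The sketch below illustrates the idea behind those queries on simplified diagnostic log entries: every delete operation is reported, whether it succeeded or failed (for example because an immutability policy blocked it). We assume the StorageDelete category records blob and file deletions as `DeleteBlob` and `DeleteFile` operations with an HTTP status code; the entry fields (`operationName`, `statusCode`, `uri`, `caller`) are hypothetical.

```python
# Assumed operation names for blob and file deletions in the StorageDelete logs.
DELETE_OPERATIONS = {"DeleteBlob", "DeleteFile"}


def delete_attempts(log_entries):
    """Report every delete attempt, including ones the storage service rejected."""
    findings = []
    for entry in log_entries:
        if entry["operationName"] not in DELETE_OPERATIONS:
            continue
        # Any non-2xx status is treated as a failed attempt.
        succeeded = 200 <= entry["statusCode"] < 300
        findings.append({
            "object": entry["uri"],
            "caller": entry["caller"],
            # A failed delete (e.g. on immutable storage) is still a warning sign.
            "outcome": "succeeded" if succeeded else "failed",
        })
    return findings


if __name__ == "__main__":
    entries = [
        {"operationName": "DeleteBlob", "statusCode": 202,
         "uri": "backups/2024-01-01.bak", "caller": "svc-backup"},
        {"operationName": "DeleteBlob", "statusCode": 409,
         "uri": "backups/2024-01-02.bak", "caller": "unknown-app"},
        {"operationName": "GetBlob", "statusCode": 200,
         "uri": "backups/2024-01-02.bak", "caller": "svc-backup"},
    ]
    for finding in delete_attempts(entries):
        print(finding)
```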

Note that the “soft delete” feature for File Shares applies at the share level, not at the file level. For file-level protection, you need to use Azure Backup to protect the files. When using containers for storage, “soft delete” is available at the container level as well as at the “blob/file” level. Please verify these features before relying on this blog post, as they are subject to change.


FalconForce realizes ambitions by working closely with its customers in a methodical manner, improving their security in the digital domain.

Energieweg 3

3542 DZ Utrecht

The Netherlands

FalconForce B.V.

[email protected]

(+31) 85 044 93 34

KVK 76682307

BTW NL860745314B01